Spiking HM

Created Thursday 30 May 2019

Neuromorphic computation

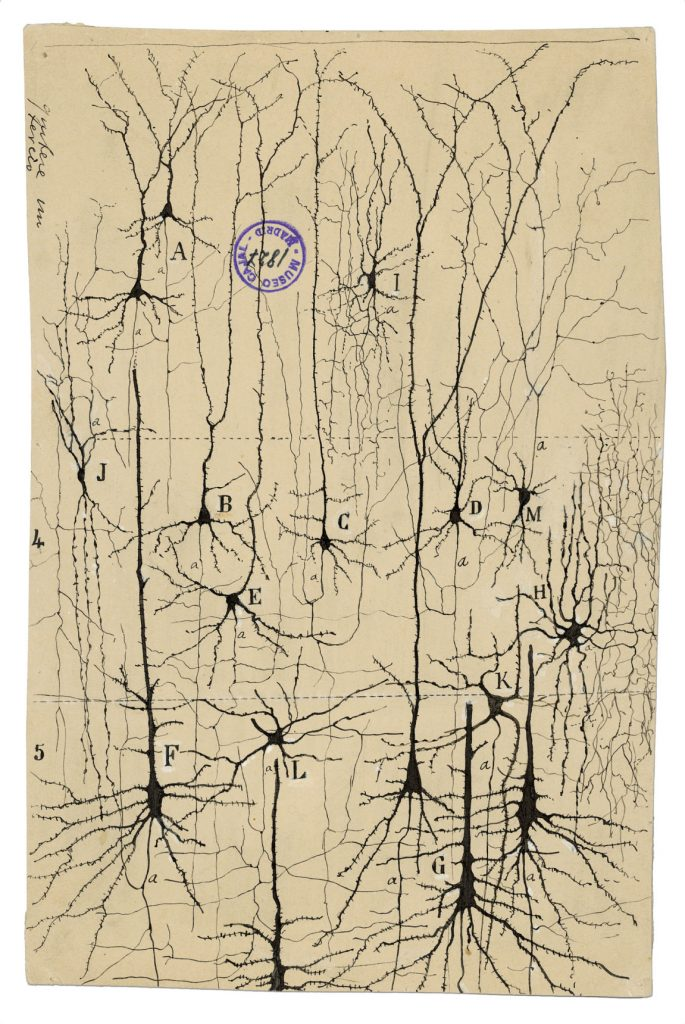

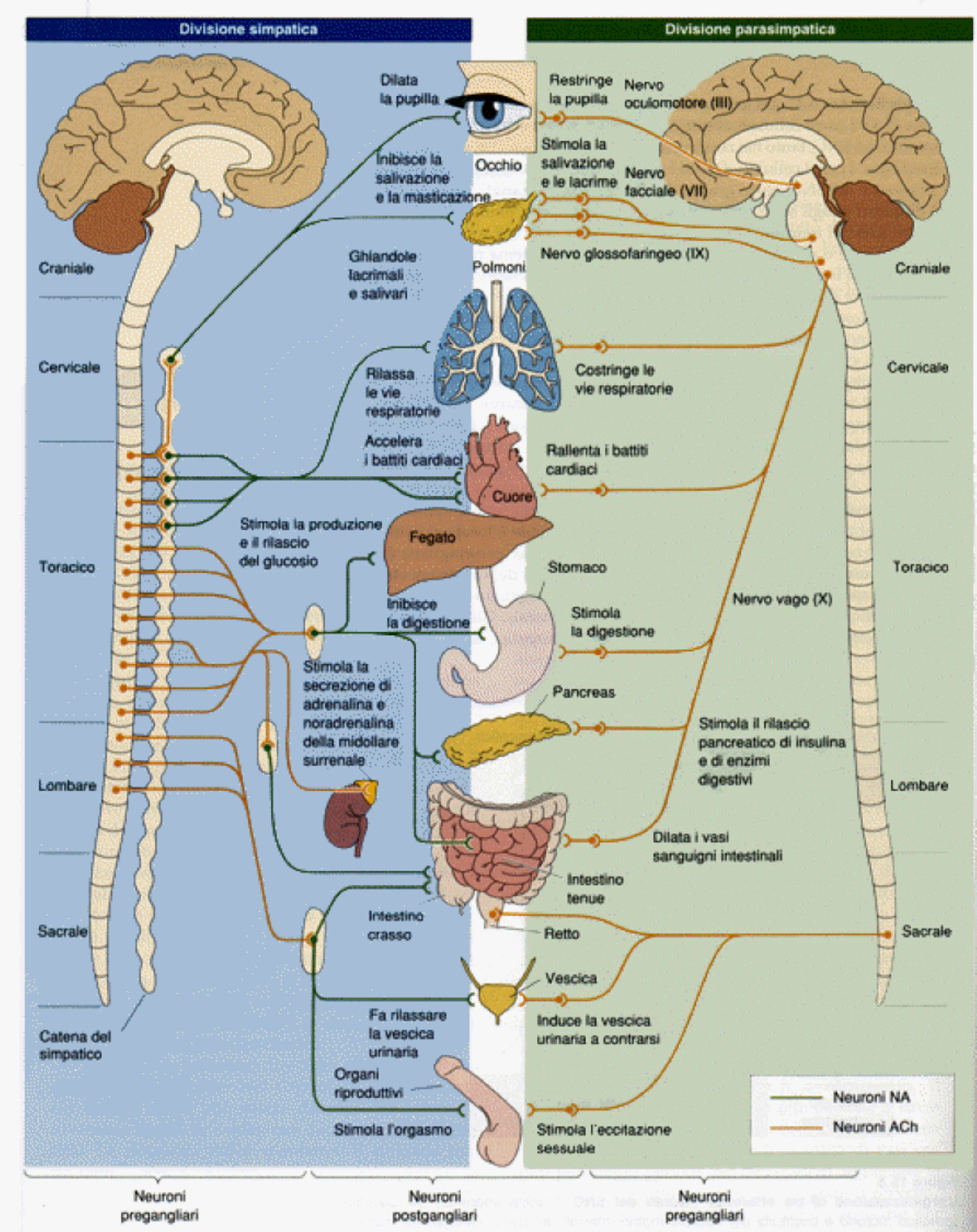

- Neurons: if you know them, you avoid them

- Algorithms for Spiking Neural Networks

- Three chips

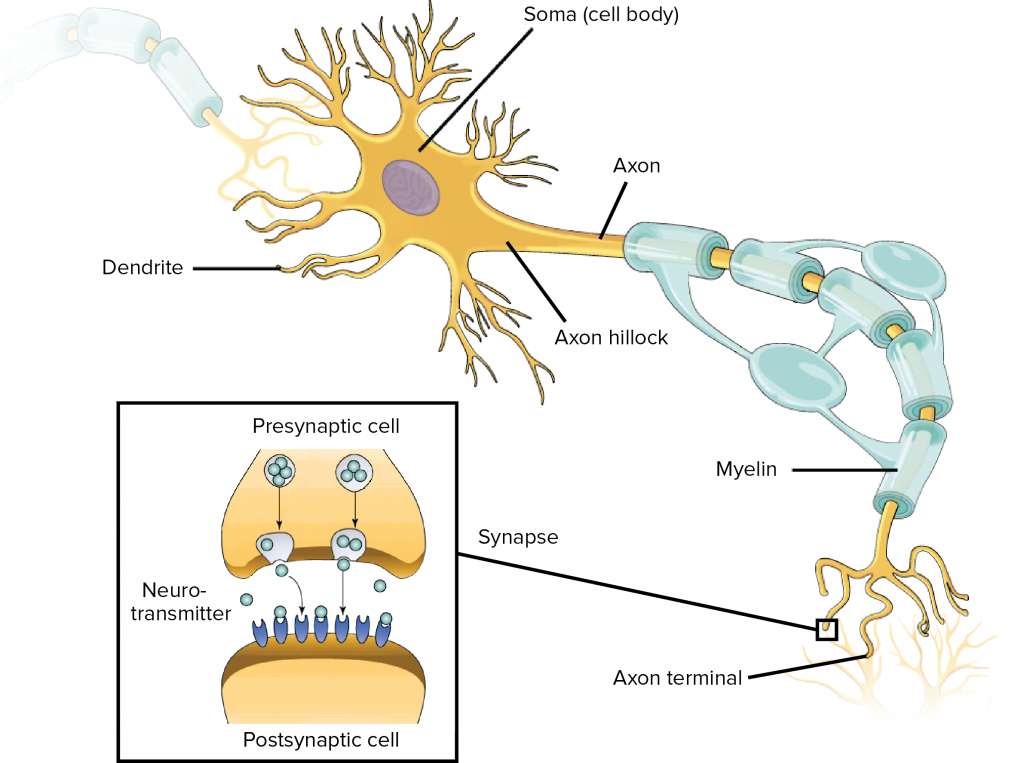

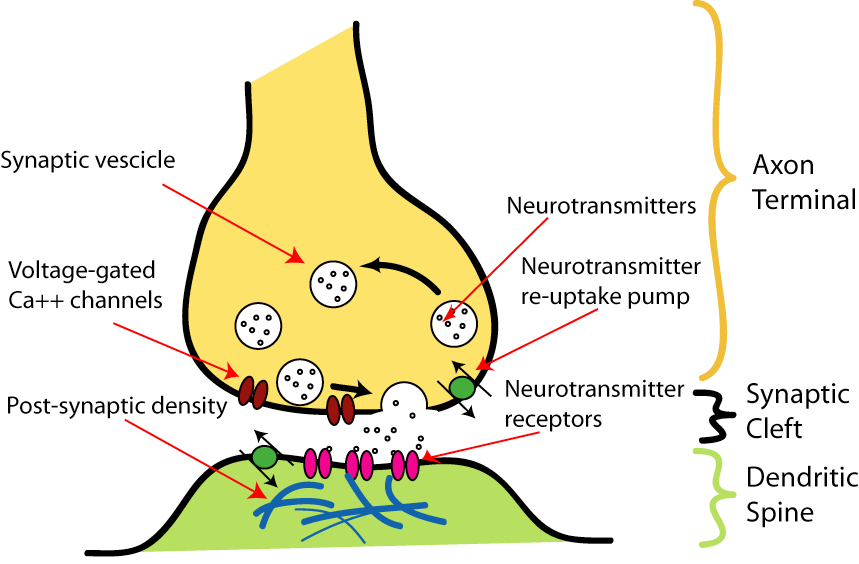

Synapses

Dynamical systems

- A set of differential equations

- A connectivity matrix

- A mathematical description of the synapses
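The three ingredients above can be sketched in a few lines. This is a minimal illustration, not a reference model: it assumes leaky integrate-and-fire neurons, an exponentially decaying synaptic current, and forward-Euler integration. The function name `simulate_lif_network` and all parameter values are hypothetical choices for this example.

```python
import numpy as np

def simulate_lif_network(W, I_ext, steps=1000, dt=1e-3, tau_m=20e-3,
                         tau_s=5e-3, v_thresh=1.0, v_reset=0.0):
    """Euler-integrate a network of leaky integrate-and-fire neurons.

    W[i, j] is the weight from neuron j to neuron i (connectivity matrix).
    I_ext is a constant external input per neuron (assumed, dimensionless).
    Returns a boolean array of shape (steps, n) marking spikes.
    """
    W = np.asarray(W, dtype=float)
    I_ext = np.asarray(I_ext, dtype=float)
    n = W.shape[0]
    v = np.zeros(n)  # membrane potentials
    s = np.zeros(n)  # synaptic currents (assumed exponential synapse model)
    spikes = np.zeros((steps, n), dtype=bool)
    for t in range(steps):
        # Differential equations:
        #   tau_m * dv/dt = -v + s + I_ext
        #   tau_s * ds/dt = -s
        v += dt / tau_m * (-v + s + I_ext)
        s += dt / tau_s * (-s)
        fired = v >= v_thresh
        v[fired] = v_reset
        # Each spike injects current into its targets through W.
        s += W @ fired.astype(float)
        spikes[t] = fired
    return spikes

# Two neurons: neuron 0 is driven externally and excites neuron 1.
W = np.array([[0.0, 0.0],
              [10.0, 0.0]])
spikes = simulate_lif_network(W, I_ext=[1.5, 0.0])
print(spikes.sum(axis=0))  # spike counts per neuron
```

Here the differential equations are the two update lines, the connectivity matrix is `W`, and the synapse description is the decaying current `s`. Swapping any of the three changes the model without touching the others.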

Learning

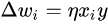

Simple case, Hebbian: "fire together, wire together".

Hard case: STDP → dendritic plasticity, LTP, scaling...

But also:

- Neuronal adaptation (ion channels)

- Pruning
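The simple and hard cases above can be contrasted in code. This is a sketch under stated assumptions: a rate-based Hebbian update and a pair-based STDP window with exponential decay. The function names, learning rate, amplitudes, and time constants are illustrative values, not taken from these notes.

```python
import numpy as np

def hebbian_update(w, pre, post, lr=0.01):
    """Rate-based Hebbian rule: dw[i, j] = lr * post[i] * pre[j]."""
    return w + lr * np.outer(post, pre)

def stdp_dw(delta_t, a_plus=0.01, a_minus=0.012,
            tau_plus=20e-3, tau_minus=20e-3):
    """Pair-based STDP window, with delta_t = t_post - t_pre in seconds.

    Pre-before-post (delta_t > 0) potentiates; otherwise the synapse is
    depressed. Treating delta_t == 0 as depression is a convention chosen here.
    """
    delta_t = np.asarray(delta_t, dtype=float)
    return np.where(delta_t > 0,
                    a_plus * np.exp(-delta_t / tau_plus),
                    -a_minus * np.exp(delta_t / tau_minus))

w = hebbian_update(np.zeros((2, 2)), pre=[1.0, 0.0], post=[0.0, 1.0])
print(w)                          # only w[1, 0] grows
print(stdp_dw([0.010, -0.010]))   # potentiation, then depression
```

The Hebbian rule only needs firing rates, while STDP depends on the relative timing of individual spikes, which is what makes it the harder case.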

Plasticity

The truth

Algorithms

A few ideas

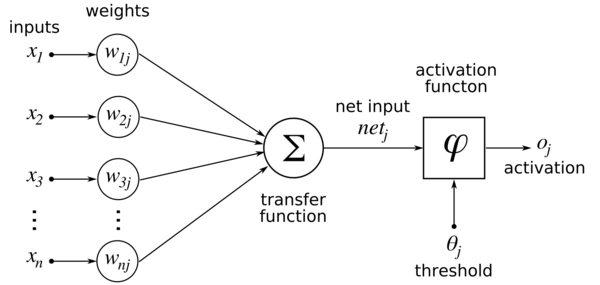

- Computation: transfer, processing, and storage of information.

- → Memory, transfer function, etc.

Then, if we like, we can talk about cognition and machine learning

Machine Learning

Reservoir Computing

backprop

E-prop

Turing stuff

A neural Turing machine (NTM) is a recurrent neural network model published by Alex Graves et al. in 2014.[1] NTMs combine the fuzzy pattern matching capabilities of neural networks with the algorithmic power of programmable computers. An NTM has a neural network controller coupled to external memory resources, which it interacts with through attentional mechanisms. The memory interactions are differentiable end-to-end, making it possible to optimize them using gradient descent.[2] An NTM with a long short-term memory (LSTM) network controller can infer simple algorithms such as copying, sorting, and associative recall from input and output examples alone.[1]

https://en.wikipedia.org/wiki/Neural_Turing_machine

https://en.wikipedia.org/wiki/Recurrent_neural_network
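To make "differentiable memory access through attention" concrete, here is a minimal sketch of a content-based read. It covers only one piece of an NTM: the controller, the write head, and location-based addressing are left out. The function name `content_read` and the sharpness value `beta` are assumptions for illustration.

```python
import numpy as np

def content_read(memory, key, beta=5.0):
    """Soft read: softmax over cosine similarity between key and memory rows.

    memory: array of shape (slots, width); key: array of shape (width,).
    Returns the read vector and the attention weights over slots.
    """
    eps = 1e-8  # avoids division by zero for all-zero rows
    norms = np.linalg.norm(memory, axis=1) * np.linalg.norm(key)
    similarity = memory @ key / (norms + eps)
    logits = beta * similarity
    weights = np.exp(logits - logits.max())  # numerically stable softmax
    weights /= weights.sum()
    return weights @ memory, weights

memory = np.eye(3)
read_vector, weights = content_read(memory, key=np.array([0.0, 1.0, 0.0]))
print(weights)      # most of the weight falls on slot 1
print(read_vector)
```

Because every slot contributes with a smooth weight instead of a hard index, gradients can flow through the read, which is what lets gradient descent train the memory access.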

Sums

1+11 =

23*5 =

25*77 =

2346 - 1352353 =

19*3245325=
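For contrast with doing these by hand, a conventional computer evaluates all five essentially instantly. The snippet below is only an illustration of that point; the dictionary name `sums` is arbitrary.

```python
# The five sums from the list above, evaluated by the machine.
sums = {
    "1+11": 1 + 11,
    "23*5": 23 * 5,
    "25*77": 25 * 77,
    "2346 - 1352353": 2346 - 1352353,
    "19*3245325": 19 * 3245325,
}

for expression, value in sums.items():
    print(f"{expression} = {value}")
# 1+11 = 12
# 23*5 = 115
# 25*77 = 1925
# 2346 - 1352353 = -1350007
# 19*3245325 = 61661175
```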

Test

Quick

Who is it?

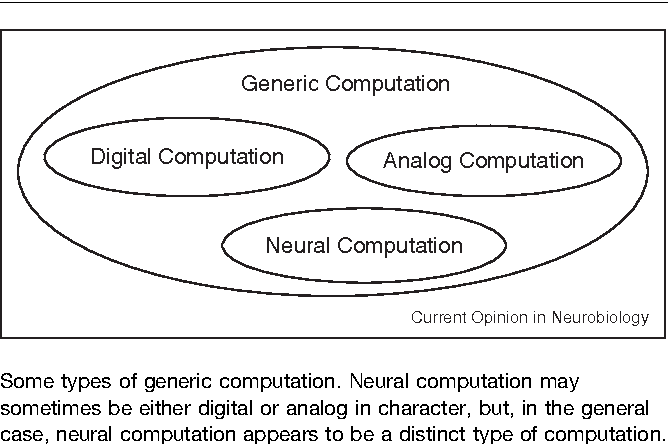

In general

So the problem is not one of computational power: it is not well understood what spiking neural networks can compute!

Are they super-Turing? What does that mean?

Is "animal" cognition super-Turing? Is it something else?

Neuromorphic devices

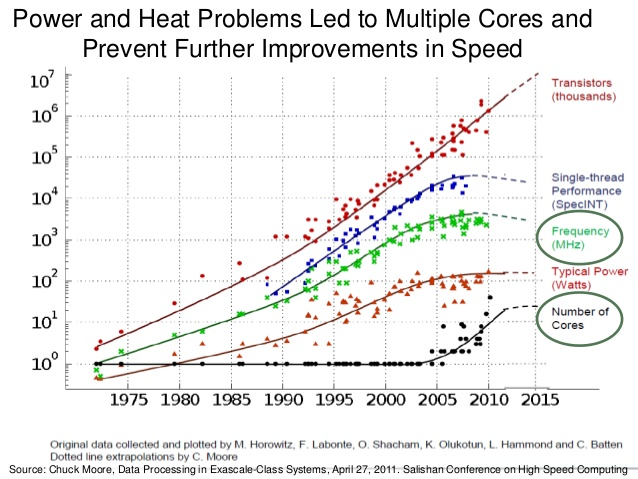

Moore's law

Dennard scaling, also known as MOSFET scaling [Dennard et al., 1974], states, roughly, that as transistors get smaller their power density stays constant, so that power use stays in proportion to area; both voltage and current scale (downward) with length.
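The statement above is easy to check numerically. This is a sketch of the ideal case only, assuming a square transistor footprint and power equal to voltage times current. The function name `dennard_scale` and the input values are hypothetical.

```python
def dennard_scale(length, voltage, current, k):
    """Shrink a transistor by a factor k > 1 under ideal Dennard scaling.

    Length, voltage, and current all scale as 1/k. Power (V * I) and
    area (length ** 2, assuming a square footprint) both scale as 1/k**2,
    so power density is unchanged.
    """
    new_length = length / k
    new_voltage = voltage / k
    new_current = current / k
    return {
        "power_density_before": (voltage * current) / length ** 2,
        "power_density_after": (new_voltage * new_current) / new_length ** 2,
        "power_ratio": (new_voltage * new_current) / (voltage * current),
    }

result = dennard_scale(length=1.0, voltage=1.0, current=1.0, k=1.4)
print(result)  # power per transistor roughly halves, density stays the same
```

Once voltage can no longer be reduced along with length, the power term stops shrinking as fast as the area and power density rises, which is the breakdown referred to in the Dennard scaling link in the reference list.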

Time/Space/Energy

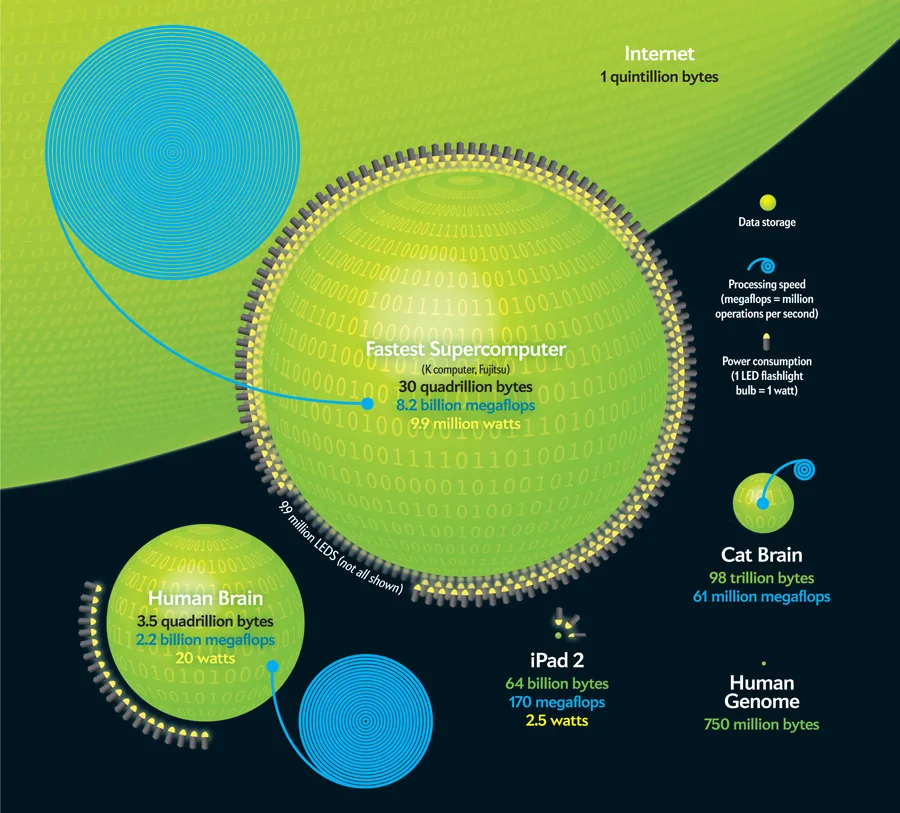

Note that a “human-scale” simulation with 100 trillion synapses (with relatively simple models of neurons and synapses) required 96 Blue Gene/Q racks of the Lawrence Livermore National Lab Sequoia supercomputer and, even so, ran 1,500 times slower than real time.

A hypothetical computer able to run this simulation in real time would require 12 GW, whereas the human brain consumes merely 20 W.
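The size of that gap is worth spelling out. The snippet below only divides the two figures quoted above; the variable names are arbitrary.

```python
machine_power_w = 12e9  # 12 GW, hypothetical real-time machine (figure quoted above)
brain_power_w = 20.0    # 20 W, human brain (figure quoted above)

ratio = machine_power_w / brain_power_w
print(f"{ratio:.0e}")  # 6e+08
```

On these figures the hypothetical machine would draw about 600 million times more power than the brain it simulates.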

Machine learning power consumption

TrueNorth

https://www.research.ibm.com/artificial-intelligence/experiments/try-our-tech/

http://www.lescienze.it/news/2014/08/11/news/chip_reti_neurali_cervello_consumi_ridotti_efficienza_truenorth-2245681/

https://en.wikipedia.org/wiki/TrueNorth

Braindrop

Braindrop is the first neuromorphic system designed to be programmed at a high level of abstraction. Previous neuromorphic systems were programmed at the neurosynaptic level and required expert knowledge of the hardware to use. In stark contrast, Braindrop's computations are specified as coupled nonlinear dynamical systems and synthesized to the hardware by an automated procedure. This procedure not only leverages Braindrop's fabric of subthreshold analog circuits as dynamic computational primitives but also compensates for their mismatched and temperature-sensitive responses at the network level. Thus, a clean abstraction is presented to the user. Fabricated in a 28-nm FDSOI process, Braindrop integrates 4096 neurons in 0.65 mm².

References

https://web.stanford.edu/group/brainsinsilicon/neuromorphics.html

http://www.human-memory.net/ | The Human Memory - what it is, how it works and how it can go wrong

http://www.human-memory.net/processes_storage.html | Memory Storage - Memory Processes - The Human Memory

https://www.semanticscholar.org/paper/Foundations-of-computational-neuroscience-Piccinini-Shagrir/d01b28fb22346bea00b053b8ccbd00ffc202ccf0/figure/0 | Figure 1 from Foundations of computational neuroscience - Semantic Scholar

https://en.wikipedia.org/wiki/Dennard_scaling#Breakdown_of_Dennard_scaling_around_2006 | Dennard scaling - Wikipedia

http://jackterwilliger.com/biological-neural-network-synapses/ | Synapses, (A Bit of) Biological Neural Networks – Part II

https://en.wikipedia.org/wiki/Von_Neumann_architecture | Von Neumann architecture - Wikipedia

https://books.google.it/books?hl=it&lr=&id=_eDICgAAQBAJ&oi=fnd&pg=PR5&dq=Bert+Kappen+neuromorphic&ots=vO-WQ-2b91&sig=0qSWaafR2Dv2Dm0QCJAqHyEkKFU#v=onepage&q=Bert Kappen neuromorphic&f=false | Modeling Language, Cognition And Action - Proceedings Of The Ninth Neural ... - Google Libri

https://en.wikichip.org/wiki/intel/loihi | Loihi - Intel - WikiChip

https://en.wikichip.org/wiki/neuromorphic_chip | Neuromorphic Chip - WikiChip

https://en.wikipedia.org/wiki/Moore%27s_law | Moore's law - Wikipedia

https://en.wikipedia.org/wiki/Hebbian_theory | Hebbian theory - Wikipedia

http://www.lescienze.it/news/2014/08/11/news/chip_reti_neurali_cervello_consumi_ridotti_efficienza_truenorth-2245681/ | TrueNorth, il chip a basso consumo che imita le reti cerebrali - Le Scienze

https://www.slideshare.net/Funk98/end-of-moores-law-or-a-change-to-something-else | End of Moore's Law?

https://mms.businesswire.com/media/20150617006169/en/473143/5/2272063_Moores_Law_Graphic_Page_14.jpg | 2272063_Moores_Law_Graphic_Page_14.jpg

https://www.techopedia.com/definition/32953/neuromorphic-computing | What is Neuromorphic Computing? - Definition from Techopedia

http://www.messagetoeagle.com/artificial-intelligence-super-turing-machine-imitates-human-brain/ | Artificial Intelligence: Super-Turing Machine Imitates Human Brain | MessageToEagle.com

https://www.quora.com/If-the-human-brain-were-a-computer-it-could-perform-38-thousand-trillion-operations-per-second-The-world’s-most-powerful-supercomputer-BlueGene-can-manage-only-002-of-that-But-we-cannot-perform-like-a-supercomputer-Why | 'If the human brain were a computer, it could perform 38 thousand trillion operations per second. The world’s most powerful supercomputer, BlueGene, can manage only .002% of that.' But, we cannot perform like a supercomputer. Why? - Quora

https://www.nbcnews.com/sciencemain/human-brain-may-be-even-more-powerful-computer-thought-8C11497831 | Human brain may be even more powerful computer than thought

https://www.scientificamerican.com/article/computers-vs-brains/?redirect=1 | Computers versus Brains - Scientific American

https://www.youtube.com/results?search_query=go+artificial | go artificial - YouTube

https://www.youtube.com/watch?v=g-dKXOlsf98 | The computer that mastered Go - YouTube

https://www.youtube.com/watch?v=TnUYcTuZJpM | Google's Deep Mind Explained! - Self Learning A.I. - YouTube

https://cacm.acm.org/magazines/2019/4/235577-neural-algorithms-and-computing-beyond-moores-law/fulltext#R33 | Neural Algorithms and Computing Beyond Moore's Law | April 2019 | Communications of the ACM

https://medium.com/@thomas.moran23/the-amazing-neuroscience-and-physiology-of-learning-1247d453316b | The Amazing Neuroscience and Physiology of Learning